We've officially passed the two-year anniversary of the generative AI revolution that ChatGPT kicked off, and momentum continues to build. Generative AI is advancing at an astonishing rate, spreading across platforms, formats, and even devices.

Here are 10 key announcements that defined 2024 as a landmark year in artificial intelligence.

OpenAI introduces GPT-4o

When ChatGPT (powered by GPT-3.5) debuted in November 2022, it showed off a striking ability to understand and generate natural language. While that was a groundbreaking achievement at the time, it wasn't until the rollout of GPT-4o in May 2024 that generative AI systems truly reached a new level.

Building on its predecessor's ability to analyze and generate both text and images, GPT-4o offers significantly better contextual understanding than GPT-4. This improvement translates into stronger performance across a range of tasks, including image captioning, visual analysis, and the creation of both creative and analytical outputs such as graphs and charts.

Advanced Voice Mode allows machines to interact like humans

In September, OpenAI solidified its position as a leader in artificial intelligence by launching Advanced Voice Mode for ChatGPT subscribers. This feature eliminates the need for typed inputs, allowing users to engage in natural, conversational exchanges with the AI.

Because GPT-4o responds with very low latency, Advanced Voice Mode can hold near-real-time spoken conversations, even letting users interrupt it mid-sentence, which makes talking to the AI feel far closer to talking with a person.

Generative AI reaches the edge of technology

Since ChatGPT’s launch in 2022, the landscape for AI has dramatically evolved. Today, generative AI is integrated across a diverse array of devices, from smartphones to smart home gadgets and even autonomous vehicles. ChatGPT can now be accessed via desktop apps, APIs, mobile applications, and even through traditional phone lines. Microsoft has taken the lead by embedding AI directly into its Copilot+ laptops.

Another notable push came from Apple, whose Apple Intelligence platform, despite a somewhat rocky launch, aims to make generative AI more accessible than ever.

No matter how the respective launches turn out, the advancements made by Copilot+ laptops and Apple Intelligence illustrate just the beginning of this technological progression.

Nuclear energy experiences a comeback

Prior to this year, nuclear power was viewed skeptically in the U.S., often considered unreliable and dangerous, largely due to the Three Mile Island accident in 1979 that released radioactive material into the atmosphere. However, with the growing demand for electricity from modern AI models—along with the strain they place on power grids—many leading tech companies are reconsidering the use of nuclear energy for powering their data centers.

Amazon, for instance, acquired a nuclear-powered data center campus from Talen Energy in March and announced a deal with Energy Northwest to develop small modular reactors in October. Likewise, Microsoft signed a power purchase agreement with Constellation Energy, owner of the infamous Three Mile Island plant, which is working to restart the site's Unit 1 reactor to supply electricity.

Agents are set to revolutionize generative AI

In 2024, the AI sector learned that there are limits to how much data, computing power, and resources can be poured into enhancing large language models before returns start to diminish. That realization prompted a shift away from the sprawling LLMs that have defined the generative AI landscape and toward "agents": more nimble, task-specific AI systems designed to carry out actions efficiently on a user's behalf.

Anthropic introduced its agent capability, called Computer Use, for Claude 3.5 Sonnet in October, while Microsoft unveiled Copilot Actions in November. OpenAI is also expected to launch its own agent feature in January.

The emergence of reasoning models in AI

Many contemporary large language models prioritize speedy responses, often at the expense of accuracy. OpenAI's o1 reasoning model, previewed in September and fully launched in December, takes a different approach: it trades speed for accuracy, working through a problem step by step and checking its reasoning before it answers.

Although this model hasn't reached widespread adoption yet (o1 is available only to ChatGPT Plus and Pro subscribers), other key players in AI are developing similar technology. On December 19, Google revealed its own version, called Gemini 2.0 Flash Thinking Experimental, and OpenAI previewed an o1 successor, called o3, during its livestream event on December 20.

AI-enhanced search capabilities proliferate online

With generative AI becoming omnipresent, its integration into fundamental internet functions was inevitable. Google has been refining this technology since May 2023, when it first released the Search Generative Experience, and in May 2024 it launched AI Overviews, which summarize the requested information above the search results.

Taking it a step further, Perplexity AI provides an “answer engine” that searches the web for data, synthesizing it into a cohesive, conversational response that cites its sources, thus removing the need to sift through numerous links. OpenAI mirrored this approach with its own system for ChatGPT, known as ChatGPT Search, which was announced in October.

Anthropic's Artifacts sparks a new collaborative movement

Working with lengthy documents, whether comprehensive essays or long blocks of computer code, within a chat interface can be daunting, often requiring extensive scrolling back and forth to view the complete document.

Anthropic's Artifacts feature, unveiled in June, alleviates this problem by offering a dedicated preview window where users can examine AI-generated text and code outside the main chat. The feature proved so popular that OpenAI quickly introduced a similar option, Canvas, in October.

With its latest innovations, Anthropic has emerged as a strong competitor to OpenAI and Google this year, marking a significant shift in the landscape.

Image and video generators master human features


In the past, AI-generated content was easy to spot thanks to frequent anatomical glitches, such as extra limbs or fingers. As 2024 draws to a close, however, distinguishing between human-created and AI-generated work has become increasingly difficult. Video generators such as Kling, Runway's Gen-3 Alpha, and Meta's Movie Gen now produce strikingly realistic footage with precise control, while Midjourney, DALL-E 3, and Imagen 3 excel at crafting hyper-realistic still images with minimal errors.

OpenAI's Sora, which launched publicly in December, has only intensified the competition among AI video generators.

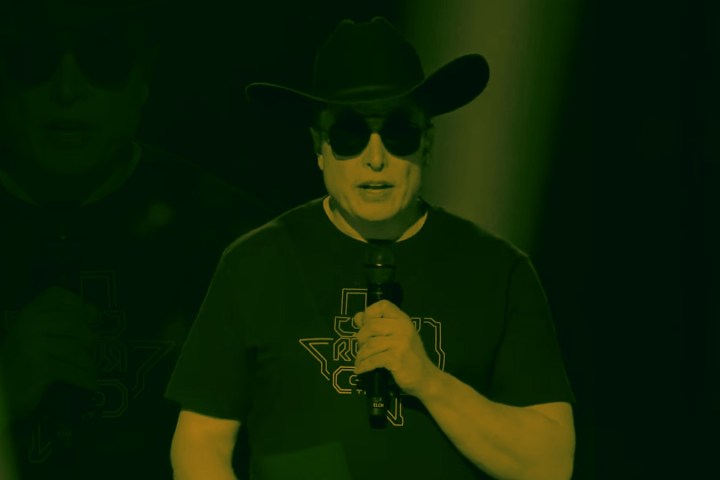

Elon Musk invests $10 billion in a premier AI training facility

xAI launched Grok 2.0 this year, which is seamlessly integrated into X. However, the most significant development regarding Elon Musk's AI initiative is the creation of the "world's largest supercomputer" near Memphis, Tennessee, which went live at 4:20 a.m. on July 22. This supercluster, powered by 100,000 Nvidia H100 GPUs, is set to train the next iterations of xAI's Grok generative models, which Musk asserts will become "the most powerful AI globally."

Musk was expected to spend around $10 billion on infrastructure and operating expenses in 2024, and he plans to double the facility's GPU count in the coming year.