Imagine a leading tech company wanting to use your Instagram and Facebook posts to train its AI models, with nothing offered in return. The company says you can opt out, but users are discovering that the opt-out mechanism simply doesn't work.

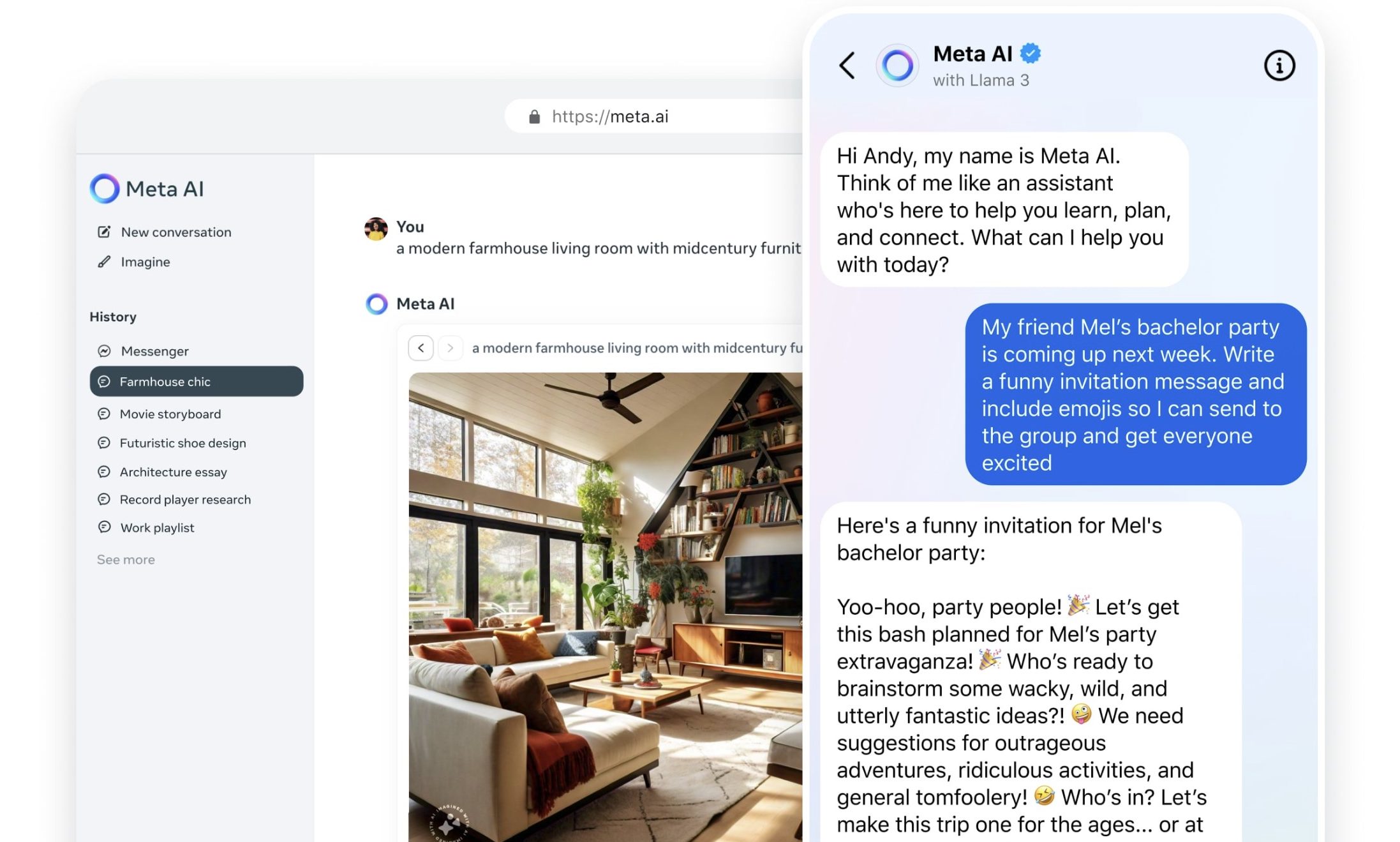

Facebook and Instagram users have recently voiced concerns about this issue. Nate Hake, founder and publisher of Travel Lemming, said he had received an email from Meta about the change, but the opt-out link it contained did not work.

Compounding the problem, when Hake contacted Meta to follow up, he was told the company could not accommodate his request. The terse reply simply indicated that no further action would be taken.

What is going on?

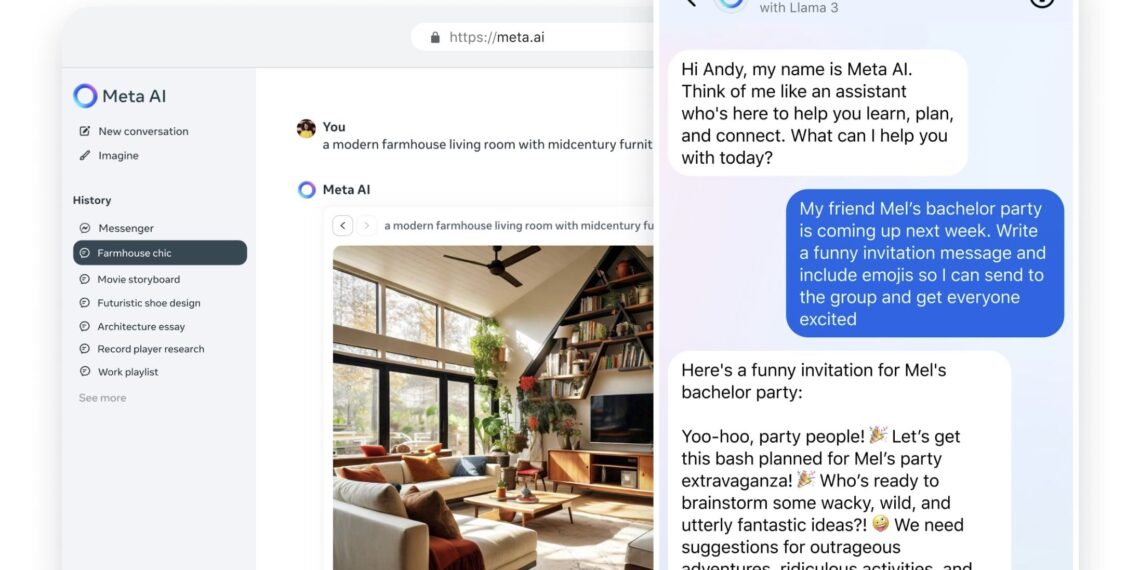

Back in 2018, when Meta was still known as Facebook, the company disclosed that it intended to train AI algorithms on the vast number of photos shared on Instagram. The Meta AI assistant and the Llama models did not yet exist, but the move foreshadowed the challenges to come.

Fast forward to the present: Meta is investing heavily in AI, which requires vast amounts of training data. Users of its platforms are now realizing that their content is being used for this purpose, and that the opt-out options appear to be ineffective.

For years, Meta has been repurposing user-generated content and has faced significant scrutiny over copyright and privacy concerns. As a result, last June the company paused its plans to train AI on data from users in the EU.

However, less than a year later, Meta announced that it would resume collecting content from users in the EU and the UK, including photos, videos, comments, and chats, for AI training. The company argued that it was simply following standard industry practice.

“We’re following the examples set by others, like Google and OpenAI, who have already leveraged data from European users to train their AI models,” the company stated in an official blog post.

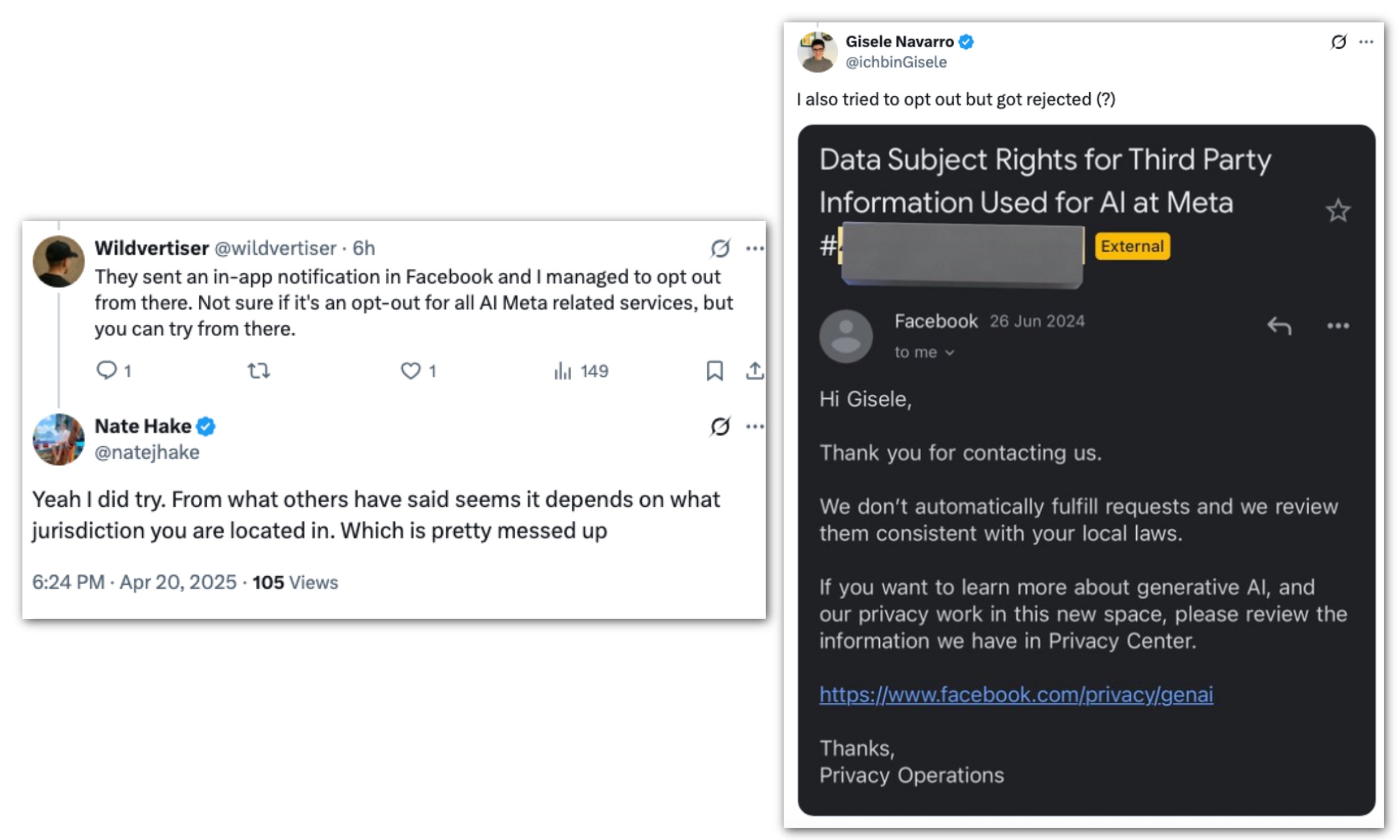

Opting out, if you can

In mid-April of this year, Meta announced that it would inform users in the EU and the UK about its revised AI training policy through both in-app notifications and email.

“These notifications will also include a link to a form, allowing individuals to object to their data being utilized in this manner at any time,” the company said. Meta stressed that the form would be easy to find and written in clear language.

Hake reported that the opt-out link Meta provided did not work. Others replying to his post described similar experiences and criticized Meta for what they saw as dubious practices. It remains unclear whether the broken link is a technical glitch or something more deliberate.

Hake pointed to a lack of respect for user autonomy and an apparent reversal of the company's commitments. Just over a week earlier, Meta had promised to “honor all objection forms submitted, including those currently on file,” yet when Hake pursued the matter, he was told the company would not be taking any action.

Meta says it supports transparency about how social content is used for AI training, but in practice there appears to be a gap between its promises and its actions.