Google's artificial intelligence efforts are centered on Gemini, which is built into much of the company's widely used software and hardware. Alongside Gemini, Google has been releasing open AI models under the Gemma brand for over a year.

Google has now announced Gemma 3, the third generation of these open models. The Gemma 3 models come in four sizes (1 billion, 4 billion, 12 billion, and 27 billion parameters) and are designed for hardware ranging from smartphones to powerful workstations.

Optimized for Mobile Devices

According to Google, Gemma 3 is the world's best single-accelerator model, meaning it can run on a single GPU or TPU instead of requiring a whole cluster of machines. In principle, this would allow a Gemma 3 model to run directly on the Tensor Processing Unit (TPU) built into a Pixel smartphone's Tensor chip, much as Pixel phones already run the Gemini Nano model locally.
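Whether a model fits on one accelerator comes down largely to memory. The following is a rough, weights-only estimate (parameter count times bytes per parameter); it ignores activations, the KV cache, and runtime overhead, and the set of numeric formats is an illustrative assumption rather than anything Google specified.

```python
# Rough, weights-only memory estimate for the four Gemma 3 sizes.
# Assumption: memory ~= parameter count x bytes per parameter. This ignores
# activations, the KV cache, and runtime overhead, so real usage is higher.

PARAM_COUNTS = {
    "1B": 1e9,
    "4B": 4e9,
    "12B": 12e9,
    "27B": 27e9,
}

# Bytes per parameter for a few common formats (illustrative selection).
BYTES_PER_PARAM = {
    "bf16": 2.0,  # 16-bit brain floating point
    "int8": 1.0,  # 8-bit quantization
    "int4": 0.5,  # 4-bit quantization
}


def weight_memory_gb(params: float, fmt: str) -> float:
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return params * BYTES_PER_PARAM[fmt] / 1e9


if __name__ == "__main__":
    for size, params in PARAM_COUNTS.items():
        row = ", ".join(
            f"{fmt}: {weight_memory_gb(params, fmt):.1f} GB" for fmt in BYTES_PER_PARAM
        )
        print(f"Gemma 3 {size} -> {row}")
```

Under these assumptions, the 27B model's weights take about 54 GB in bf16 (about 13.5 GB at 4 bits), which fits in the memory of a single 80 GB data-center GPU, while the 1B model at 4 bits needs only about 0.5 GB, small enough for a phone.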

The main advantage of Gemma 3 over its Gemini counterparts is that it is openly available, so developers can integrate and customize it to suit both mobile and desktop applications. Gemma 3 also offers out-of-the-box support for over 35 languages and pretrained support for over 140.

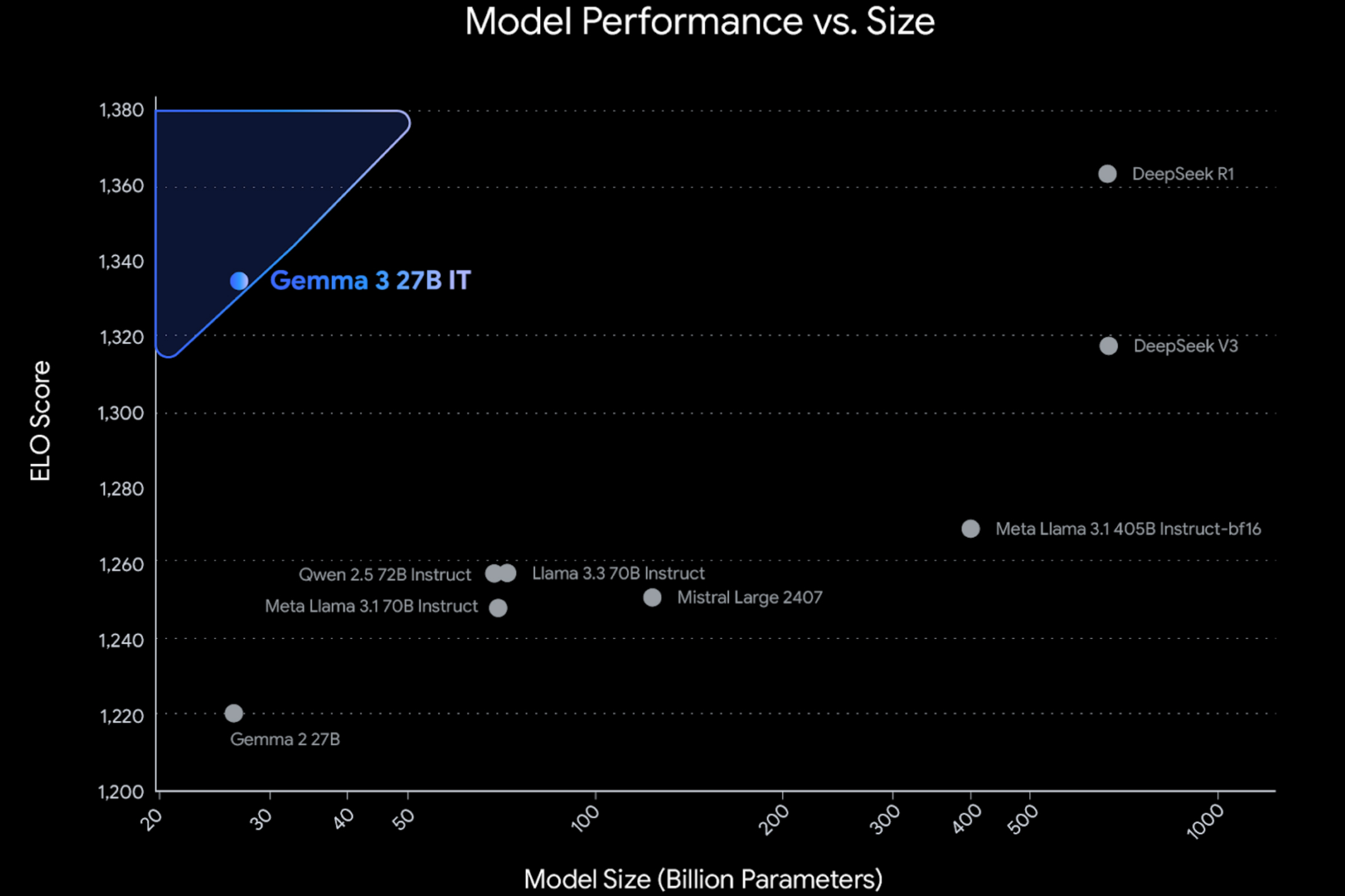

Like the recent Gemini 2.0 models, Gemma 3 is multimodal: the 4B, 12B, and 27B models can process images and short videos as well as text, while the 1B model handles text only. On performance, Google reports that Gemma 3 outperformed larger models, including DeepSeek-V3, OpenAI's reasoning model o3-mini, and Meta's Llama 3 405B, in preliminary human-preference evaluations on the LMArena leaderboard.

Flexible and Easy to Implement

Gemma 3 has a context window of 128,000 tokens (32,000 for the 1B model). Because an average English word corresponds to roughly 1.3 tokens, that is enough to fit an entire 200-page book into a single prompt. By comparison, Google's Gemini 2.0 Flash-Lite model supports a context window of one million tokens.
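The 200-page figure can be sanity-checked with simple arithmetic. This sketch uses the article's 1.3 tokens-per-word ratio plus an assumed 300 words per page, a hypothetical figure chosen for illustration since real pages vary widely.

```python
# Back-of-the-envelope check of the "200-page book" claim.
# Assumptions: ~1.3 tokens per English word (the article's figure) and
# ~300 words per printed page (hypothetical; real pages vary).

TOKENS_PER_WORD = 1.3
WORDS_PER_PAGE = 300


def tokens_for_pages(pages: int) -> float:
    """Estimate how many tokens a book of `pages` pages would need."""
    return pages * WORDS_PER_PAGE * TOKENS_PER_WORD


def words_that_fit(context_tokens: int) -> float:
    """Estimate how many English words fit in a context window."""
    return context_tokens / TOKENS_PER_WORD


if __name__ == "__main__":
    gemma3_context = 128_000
    flash_lite_context = 1_000_000
    book = tokens_for_pages(200)
    print(f"200-page book: ~{book:,.0f} tokens")
    print(f"Fits in Gemma 3's window: {book <= gemma3_context}")
    print(f"Gemma 3 window: ~{words_that_fit(gemma3_context):,.0f} words")
    print(f"Flash-Lite window: ~{words_that_fit(flash_lite_context):,.0f} words")
```

Under these assumptions, a 200-page book comes to about 78,000 tokens, comfortably inside the 128,000-token window, which itself holds roughly 98,000 words; Flash-Lite's one-million-token window holds roughly 770,000.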

Gemma 3 also supports function calling and structured outputs, which let it interact with external data sources and tools and act as an automated agent. This resembles how Gemini works across Google services such as Gmail and Docs.
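In general, function calling works by having the model emit a structured request (typically JSON) naming a tool and its arguments; the application, not the model, then runs that tool and can feed the result back. The following sketch shows only that application side. The `get_weather` tool and the `{"name": ..., "arguments": ...}` schema are hypothetical; this is not Google's API, and the exact format Gemma 3 emits depends on how it is prompted.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical local tool the model is allowed to call.
def get_weather(city: str) -> Dict[str, Any]:
    # Stand-in for a real lookup (e.g. an HTTP API); returns fixed data here.
    return {"city": city, "forecast": "sunny", "high_c": 24}


# Registry mapping tool names the model may emit to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by the model and run the matching tool.

    Assumes the prompt told the model to answer with an object shaped like
    {"name": "<tool>", "arguments": {...}}; this schema is hypothetical.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything outside the allow-list instead of guessing.
        raise ValueError(f"Model requested unknown tool: {name!r}")
    return TOOLS[name](**call.get("arguments", {}))


if __name__ == "__main__":
    # What a structured response from the model might look like.
    fake_output = '{"name": "get_weather", "arguments": {"city": "Zurich"}}'
    print(dispatch(fake_output))
```

Keeping an explicit registry of allowed tools means a malformed or unexpected request from the model fails loudly rather than executing arbitrary code.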

Google's new open models can be run locally or through the company's cloud services, such as the Vertex AI platform. Gemma 3 is available in Google AI Studio and on Kaggle, as well as on third-party platforms such as Hugging Face and Ollama.
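A minimal sketch of the local route through Ollama, assuming Ollama is installed and running on its default port 11434 and that the model was pulled under the tag `gemma3:4b` (an assumed tag; check Ollama's model library). It uses only Python's standard library to call Ollama's `/api/generate` REST endpoint; the function names are illustrative.

```python
import json
import urllib.request

# Assumptions: a local Ollama server on its default port, and a model
# pulled beforehand with `ollama pull gemma3:4b` (tag assumed).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gemma3:4b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL_TAG) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With stream=False, the reply is one JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With the server running, `generate("Explain what a context window is.")` returns the model's reply as a string. Setting `"stream": False` trades incremental output for simpler parsing; by default Ollama streams the reply as a sequence of JSON lines.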

Gemma 3 reflects a broader industry trend: companies develop flagship large language models (in Google's case, Gemini) while also releasing smaller language models. Microsoft follows a similar approach with its open Phi series of small models.

Small language models like Gemma and Phi use far fewer resources than their larger counterparts, which makes them well suited to running on devices such as smartphones. Because they can run on the device itself, requests do not have to travel to a remote server, so they also offer lower latency, an important advantage for mobile apps.