The introduction of Meta’s AI assistant on WhatsApp has sparked a wave of dissatisfaction among users, particularly in Europe, where many find themselves unable to turn off or remove the new feature, according to the BBC.

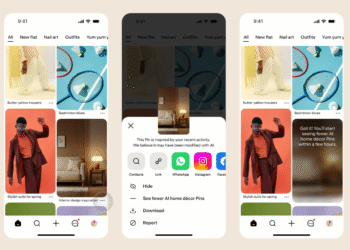

Marked by a blue circle that is always present in the chat interface, the AI assistant is built into the platform and visible by default. This has raised significant concerns about user autonomy and control.

While Meta asserts that using the AI is “completely optional,” numerous users have reported that it activates automatically and offers no straightforward way to disable it. Although the assistant can respond to inquiries, generate images, and provide information, many users consider its involuntary inclusion an invasion of privacy.

Privacy advocates have expressed strong disapproval of this move. Some experts contend that individuals should never be compelled to use AI and worry that the data used to train Meta’s models could jeopardize user privacy.

Additionally, critics have highlighted that some of the training data may include personal information or pirated content, further raising concerns about potential data misuse.

Meta insists that personal conversations on WhatsApp remain end-to-end encrypted and that interactions with the AI assistant are handled separately. However, the company has not provided an explicit way for users to opt out completely. This lack of transparency and control has intensified worries about the future of AI in messaging applications.

Users and privacy experts alike are demanding greater accountability and the option to disable AI features. The controversy adds to the ongoing global debate about consent, data ethics, and the role of AI in daily communication.