The tech giant Microsoft recently unveiled its brand-new artificial intelligence (AI) technology.

VALL-E

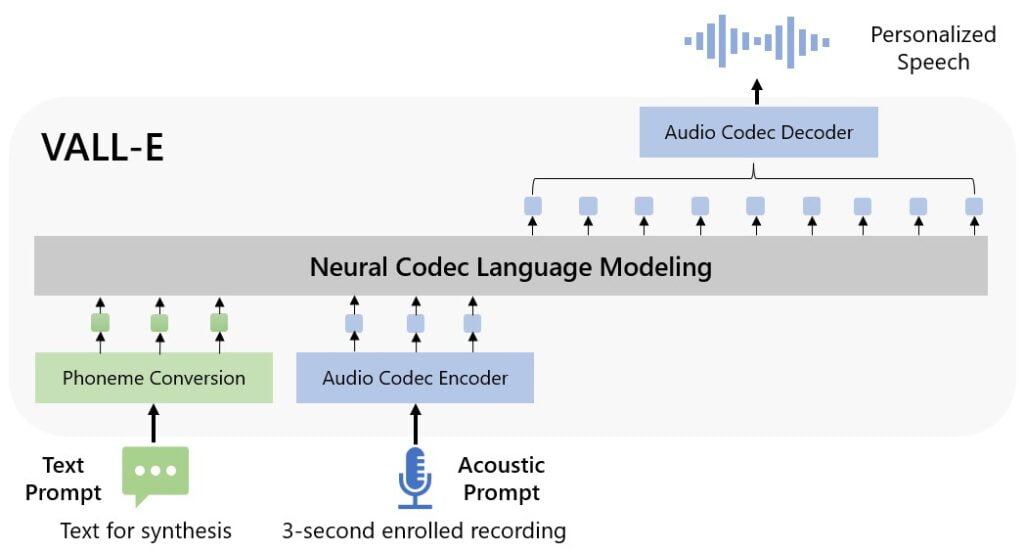

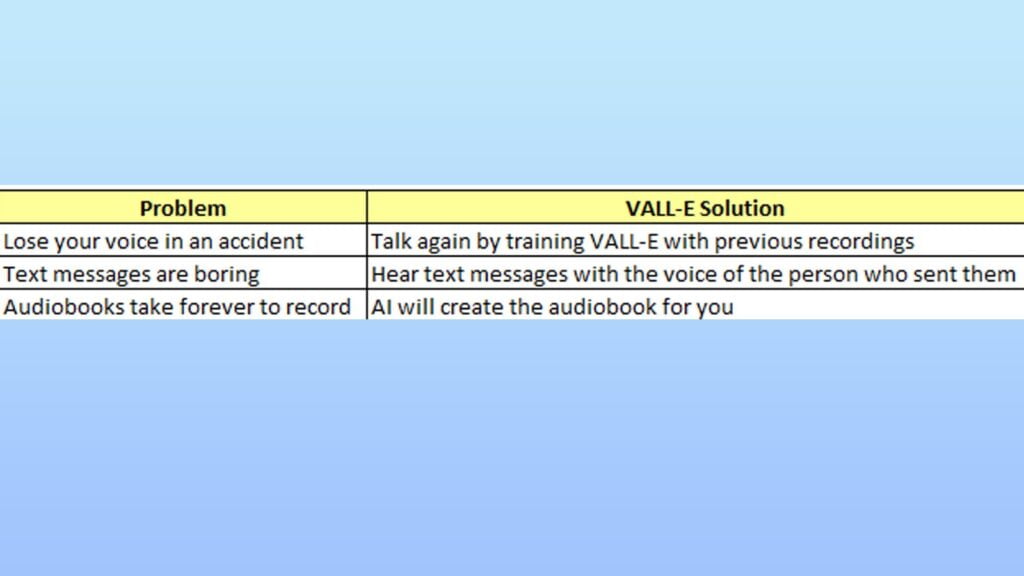

Named VALL-E, the AI model is said to be able to analyze and replicate a person’s voice from a recording as short as 3 seconds. Once it has learned a voice, VALL-E can also preserve the speaker’s emotional tone in the speech it generates.

Its creators explain that VALL-E was trained on 60,000 hours of English speech from more than 7,000 different speakers so that it could produce human-sounding speech.

Copy any voice from 3 seconds of audio

The researchers who developed VALL-E explained that the technology could be used in text-to-speech applications to produce speech from prepared transcripts. Users only need to prepare a script containing whatever they want to convey, without having to record their own voice. This is considered helpful for tasks such as editing speech recordings and creating audio content.

VALL-E can be dangerous

Whatever the broader potential of artificial intelligence, many people are concerned about the harm VALL-E could cause. The technology carries a risk of misuse for criminal purposes, for example to defeat voice identification or to impersonate specific speakers.

For example, the AI could be used to fake the voice of a famous person saying something they never said. Cases of this kind have already appeared as deepfakes in video format.

The concern is similar to the one raised about Lensa AI, which was recently criticized as a violation of art ethics because of fears that AI-generated digital art could replace human artists.

Music producers could use it to copy singers’ voices

VALL-E also raises ethical questions, for instance if music production companies use AI to sing new songs in a singer’s voice without that singer’s consent.

Aware of these concerns and possible risks, Microsoft, as the developer, has published only the capabilities and workings of the AI model and has not shared the source code.

VALL-E’s researchers say they will try to build a detection mechanism to mitigate such risks, such as a method that can tell whether an audio clip was generated by VALL-E or is an original recording.