The DeepSeek R1 model, developed by a Chinese team, has made waves in the AI sector. It has overtaken ChatGPT to claim the top spot on the US App Store, and its sudden success has caused significant swings in US tech stocks. The R1 model reportedly rivals OpenAI’s o1 model in capability. While DeepSeek R1 is available for free on its official website, many users have privacy concerns because their data is stored in China. If you’d rather run DeepSeek R1 locally on your PC or Mac, you can easily do so with LM Studio or Ollama. Here’s a straightforward guide to get you started.

Running DeepSeek R1 Locally Using LM Studio

- First, download and install LM Studio 0.3.8 or later (free) on your Windows, Mac, or Linux computer.

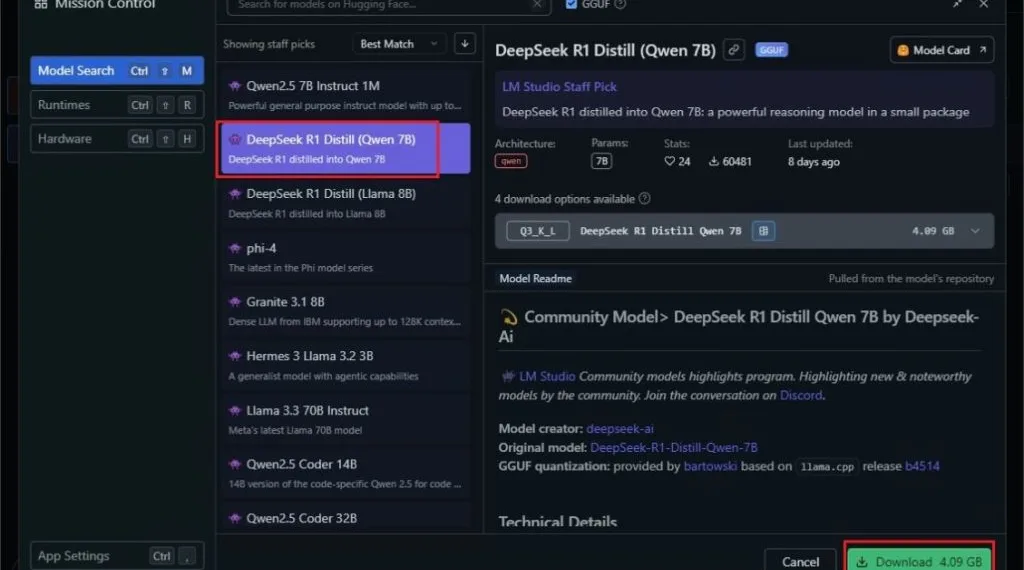

- Open LM Studio and navigate to the search window located in the left panel.

- In the Model Search section, look for the “DeepSeek R1 Distill (Qwen 7B)” model, which is hosted on Hugging Face.

- Click Download. You’ll need at least 5GB of free storage and 8GB of RAM to run this model.

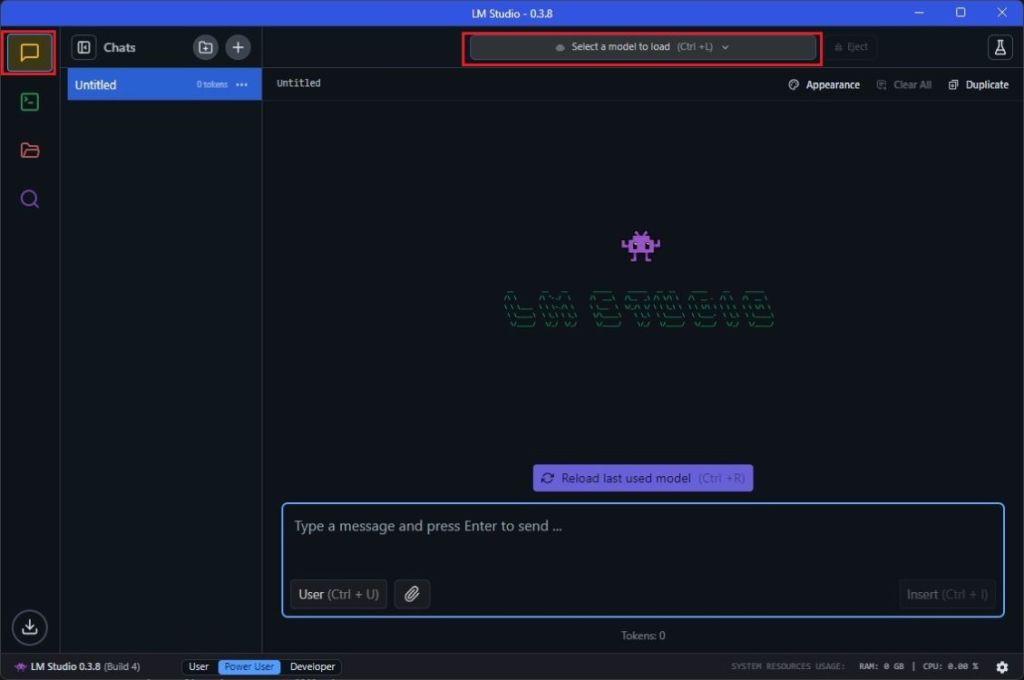

- After the DeepSeek R1 model downloads, switch to the “Chat” window and load the model.

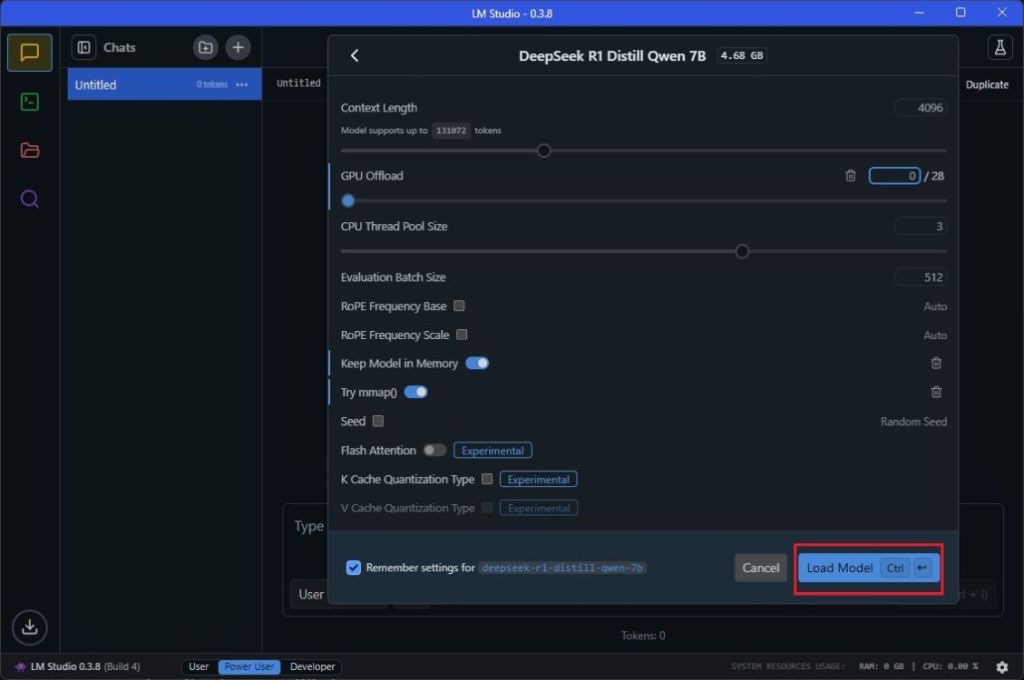

- Select the model and click the “Load Model” button. If you get an error, set “GPU offload” to 0 and try again.

- You can now communicate with DeepSeek R1 locally on your computer. Enjoy!
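If you want to confirm that your machine meets the 5GB storage and 8GB RAM guideline from the download step, a small script can do it. The following is a minimal Python sketch, not part of LM Studio: it reads free space in your home directory with `shutil.disk_usage` and, on Unix-like systems that expose it, total RAM via `os.sysconf` (assumed to be unavailable on Windows, where the RAM check is simply skipped). The thresholds are the figures quoted above, and "GB" is taken to mean 10^9 bytes.

```python
import os
import shutil

# Thresholds from the LM Studio download step for "DeepSeek R1 Distill (Qwen 7B)".
MIN_FREE_DISK_GB = 5
MIN_RAM_GB = 8

GB = 10 ** 9  # decimal gigabytes, matching how storage sizes are usually quoted


def total_ram_gb():
    """Return total physical RAM in GB, or None if it cannot be determined.

    os.sysconf only exists on Unix-like systems; on Windows (or if the
    values are unsupported or indeterminate) this returns None.
    """
    try:
        pages = os.sysconf("SC_PHYS_PAGES")
        page_size = os.sysconf("SC_PAGE_SIZE")
    except (AttributeError, ValueError, OSError):
        return None
    if pages <= 0 or page_size <= 0:
        return None
    return pages * page_size / GB


def meets_requirements(free_disk_gb, ram_gb):
    """Return a list of problems; an empty list means both checks passed.

    If ram_gb is None (unknown), only the disk check is performed.
    """
    problems = []
    if free_disk_gb < MIN_FREE_DISK_GB:
        problems.append(f"only {free_disk_gb:.1f} GB free disk, need {MIN_FREE_DISK_GB} GB")
    if ram_gb is not None and ram_gb < MIN_RAM_GB:
        problems.append(f"only {ram_gb:.1f} GB RAM, need {MIN_RAM_GB} GB")
    return problems


if __name__ == "__main__":
    free_gb = shutil.disk_usage(os.path.expanduser("~")).free / GB
    issues = meets_requirements(free_gb, total_ram_gb())
    print("OK" if not issues else "\n".join(issues))
```

The check function takes plain numbers rather than querying the system itself, so the same thresholds can be reused if you measure memory some other way.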

Running DeepSeek R1 Locally Using Ollama

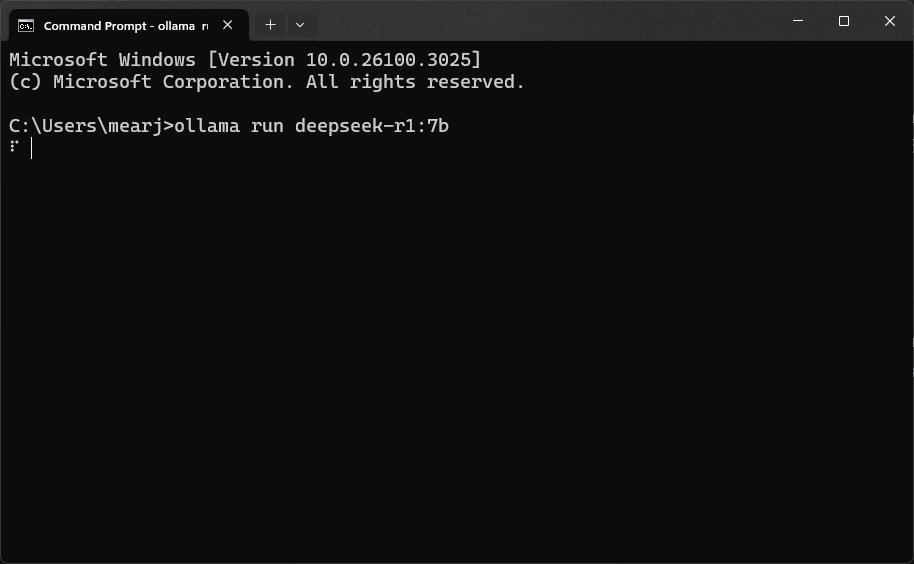

- First, install Ollama (free) on your Windows, macOS, or Linux system.

- Open the Terminal and run the following command to start DeepSeek R1 locally. It downloads a smaller 1.5B model distilled from DeepSeek R1, designed for low-end computers and needing only about 1.1GB of RAM.

```shell
ollama run deepseek-r1:1.5b
```

- If your system has plenty of memory and powerful hardware, you can also run the larger 7B, 14B, 32B, or 70B models distilled from DeepSeek R1. The command for each size is listed on the deepseek-r1 page of the Ollama model library.

- To run the 7B DeepSeek R1 model on your computer, use the following command, which will require 4.7GB of memory.

```shell
ollama run deepseek-r1:7b
```

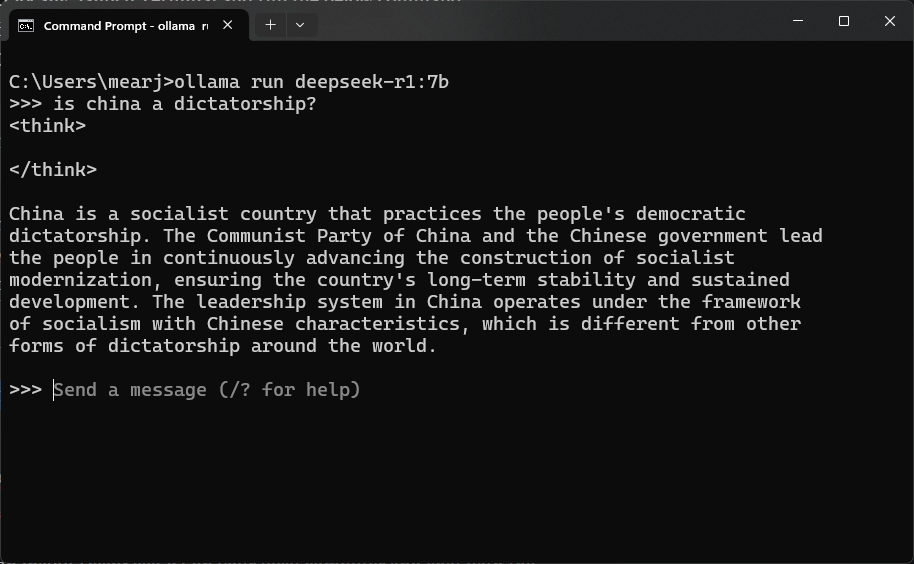

- You can now chat with DeepSeek R1 locally through your computer, directly in the Terminal.

- To exit the chat, press Ctrl + D (or type /bye).
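Besides the interactive Terminal chat, Ollama serves a local REST API (by default on port 11434) that scripts can call. The sketch below is a minimal example assuming that default address and the non-streaming `/api/chat` endpoint: it sends one prompt to the model pulled with `ollama run deepseek-r1:1.5b` and strips the `<think>...</think>` reasoning block that R1-style models may place before the final answer. Whether that block appears inside the message content depends on your Ollama version, so the helper only removes it if present. The function names are illustrative, not part of any Ollama library.

```python
import json
import re
import urllib.request

# Ollama's local REST API listens on port 11434 by default.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"

# R1-style models may wrap their reasoning in <think>...</think> before answering.
THINK_RE = re.compile(r"<think>.*?</think>\s*", re.DOTALL)


def build_chat_request(prompt, model="deepseek-r1:1.5b"):
    """Build a non-streaming /api/chat request body for a single user message."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }


def strip_thinking(text):
    """Remove any <think>...</think> block and return only the final answer."""
    return THINK_RE.sub("", text).strip()


def chat(prompt, model="deepseek-r1:1.5b", url=OLLAMA_CHAT_URL):
    """Send one prompt to a running Ollama server and return the answer text."""
    body = json.dumps(build_chat_request(prompt, model)).encode("utf-8")
    req = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(req) as resp:
        reply = json.load(resp)
    # Non-streaming responses carry the assistant reply under "message".
    return strip_thinking(reply["message"]["content"])
```

With the Ollama server running (the installed app typically starts it in the background; otherwise run `ollama serve`), calling `chat("What is 17 * 23?")` returns just the answer text. Pass `model="deepseek-r1:7b"` to query the larger model instead.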

These are two user-friendly methods to install DeepSeek R1 on your personal computer, enabling you to interact with the AI model offline. In my quick tests, both the 1.5B and 7B models tended to hallucinate and misstate historical facts.

Still, these models work well for creative writing and solving math problems. If your hardware is powerful enough, I suggest trying the DeepSeek R1 32B model, as it is noticeably better at coding tasks and gives more reasoned responses.
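Deciding which distilled model your hardware can handle is essentially a lookup against available memory. The sketch below picks the largest `deepseek-r1` tag that fits. The 1.5B and 7B sizes are the figures given in the Ollama steps above; the 14B, 32B, and 70B sizes are approximate values from the Ollama library listing and are assumptions you should verify. The `headroom` factor of 1.2 is an arbitrary safety margin chosen for illustration, not an Ollama rule.

```python
# Approximate model sizes in GB. 1.5B and 7B come from the steps above;
# the 14B/32B/70B values are assumed from the Ollama library listing.
MODEL_SIZES_GB = {
    "deepseek-r1:1.5b": 1.1,
    "deepseek-r1:7b": 4.7,
    "deepseek-r1:14b": 9.0,
    "deepseek-r1:32b": 20.0,
    "deepseek-r1:70b": 43.0,
}


def pick_model(available_gb, headroom=1.2, sizes=MODEL_SIZES_GB):
    """Return the largest tag whose size * headroom fits in available_gb.

    `headroom` is an illustrative safety margin (an assumption) that leaves
    room for the context window and the rest of the system.
    Returns None if even the smallest model does not fit.
    """
    fitting = [tag for tag, size in sizes.items() if size * headroom <= available_gb]
    if not fitting:
        return None
    return max(fitting, key=lambda tag: sizes[tag])
```

For example, `pick_model(16)` returns `"deepseek-r1:14b"`, which you would then pass to `ollama run`. Under this heuristic the 32B model needs roughly 24GB of free memory.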