Meta Unveils Breakthrough in Robot Tactile Perception: Harnessing Finger Sensors and AI to Understand and Manipulate Objects

Meta has announced advances in robotic tactile perception, presenting research that pairs finger-mounted sensors with artificial intelligence so that robots can better understand and interact with their surroundings.

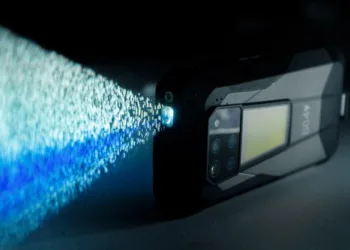

The company’s latest findings show how combining sensing hardware with AI can mimic human touch, allowing robots to perceive and manipulate a variety of objects accurately. Sensors placed on the robotic fingers gather detailed information about pressure, texture, and shape. An AI “brain” then interprets this sensory input, enabling the robotic hands to perform complex tasks with precision.

The development is seen as a major step forward in robotics, with potential applications in manufacturing, healthcare, and personal assistance. By giving robots a finer sense of touch, Meta aims to improve their ability to perform delicate operations and to make them more capable in real-world settings.

Meta’s research underscores the growing overlap of robotics, sensing technology, and artificial intelligence, and points to a future in which machines interact with the physical world in a more human-like manner. As the technology evolves, the company expects these advances to pave the way for a new generation of robots that can carry out intricate tasks alongside humans.