Recently, Google released an experimental version of its Gemini 2.0 Flash model with native image generation. The update lets users edit images conversationally, and the results remain remarkably consistent across multiple generations. I had the opportunity to try Gemini’s image generation feature and was impressed by how well it performed.

A Reddit user recently demonstrated that the Gemini 2.0 Flash model excels at removing watermarks from images. It can completely eliminate watermarks from copyrighted images, including those from major stock image platforms like Shutterstock and Getty Images, which commonly use watermarks to safeguard their content.

This revelation has understandably raised serious concerns about copyright infringement. A Google spokesperson told TechCrunch, “Using Google’s generative AI tools for copyright infringement breaches our terms of service. We’re keeping a close watch on this experimental release and are eager for developer feedback.”

Note that image generation in Gemini 2.0 is still experimental and is currently available only through Google’s AI Studio, not through the regular Gemini website or app. AI Studio is designed for developers to test models and provide feedback, but anyone can access it without restrictions.

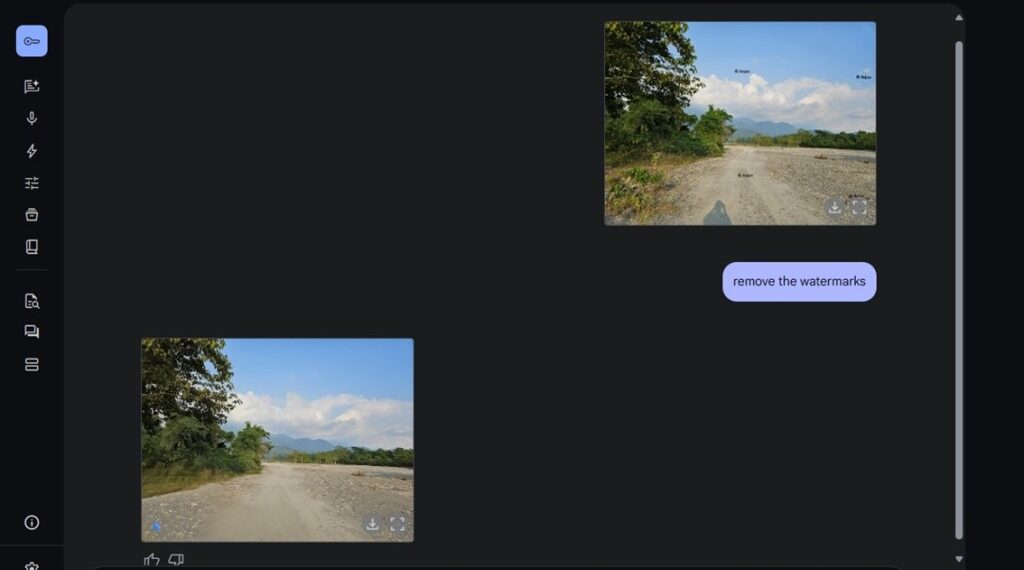

While testing the native image generation feature, I uploaded a watermarked Shutterstock image to AI Studio and asked the model to remove the watermarks. The first attempt failed, but when I ran the request a second time, the model removed the watermarks without flagging the request. I also tested it on an image bearing my own custom watermark, and the model removed it flawlessly as well.

Google does not appear to have addressed this issue yet. Last year, Google had to pause Gemini’s generation of images of people after the model refused to create images of individuals from certain demographics. Given that precedent, Google is likely to add stricter safeguards against copyright violations before releasing the feature more broadly.

Potential Misuse of Gemini’s Image Generation Features

Beyond watermark removal, the experimental Gemini model has other capabilities that could be misused. Riley Goodside, a researcher at Scale AI who studies AI vulnerabilities, shared an example on X showing how the model can convincingly alter images by generating realistic scenes.

The image below shows how convincingly Gemini replaced the background while closely following the editing instructions.

Another AI security researcher, elder_plinius, who is known for jailbreaking AI models, showcased on X how simple punctuation tricks in a prompt can circumvent safety restrictions. In the tweet shown below, Gemini changed a woman’s appearance after initially declining the request.

As AI models advance rapidly, companies must conduct thorough safety assessments before public release. Anthropic’s Constitutional Classifiers, for example, were bypassed with a universal jailbreak mere days after launch, underscoring that no AI safeguard is foolproof. Industry-wide collaboration will be essential to counter such jailbreak attempts and prevent harmful content generation.