Google’s AI Overview is no stranger to the occasional AI mix-up, and its most recent blunder has already become a classic.

AI Overview Thinks Everything Is An Idiom, and It’s Entertaining

Essentially, if you go to Google Search and type in a random sentence that sounds somewhat like an idiom or saying, the AI will bend over backward to give meaning to those empty words.

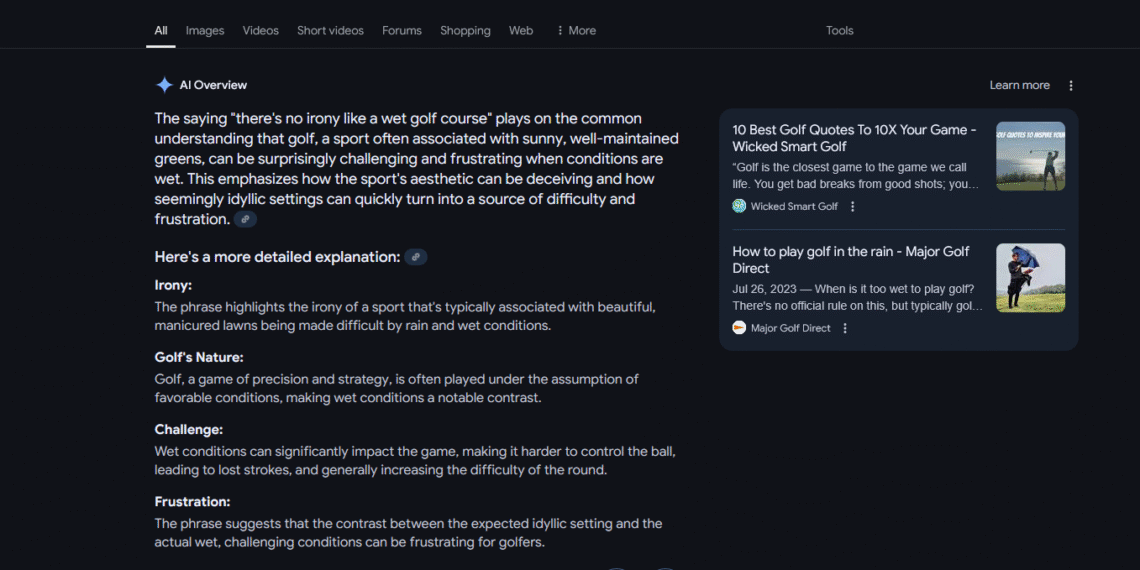

I first came across this on Threads, but it was Greg Jenner who highlighted it on Bluesky. These AI mix-ups have quickly become some of my favorites, and there are some fantastic examples showing just how far Google’s AI Overview will go to make sense of a phrase and fit it into a narrative. One standout came from Ben Stegner, the Editor in Chief at MakeUseOf, who searched for: “There’s no irony like a wet golf course means.”

To which the AI Overview replied, “The expression ‘there’s no irony like a wet golf course’ plays on the common belief that golf, a game typically associated with sunny, well-manicured greens, can be unexpectedly difficult and frustrating when conditions are damp.”

Another amusing attempt involved the phrase “giant pandas always fall twice.” The AI didn’t stop at a definition; it elaborated on how clumsy pandas are and how they prefer rolling around to walking. Then it went further still, diving into pandas’ metabolism and how they conserve energy.

AI Overview’s Latest Slip-Up Shows Why AI Chatbots Aren’t Reliable

While these quirky, forced explanations can be entertaining, they underscore a significant issue with AI chatbots in general, not only AI Overview. AI hallucinations are a genuine problem, and an especially serious one when the results are taken at face value.

When AI hallucinations were primarily an issue for people using dedicated AI chatbots like ChatGPT, Claude, or Gemini, the potential problems were somewhat contained. Yes, the AI made mistakes, but those users were intentionally engaging with AI technology.

Now, with Google’s AI Overview and its forthcoming version, AI Mode, the stakes have changed. Anyone using Google for standard searches risks encountering misleading AI-generated responses presented as fact. Google Search as we know it could be supplanted by something much less reliable, one that requires users to evaluate results more critically than ever before.

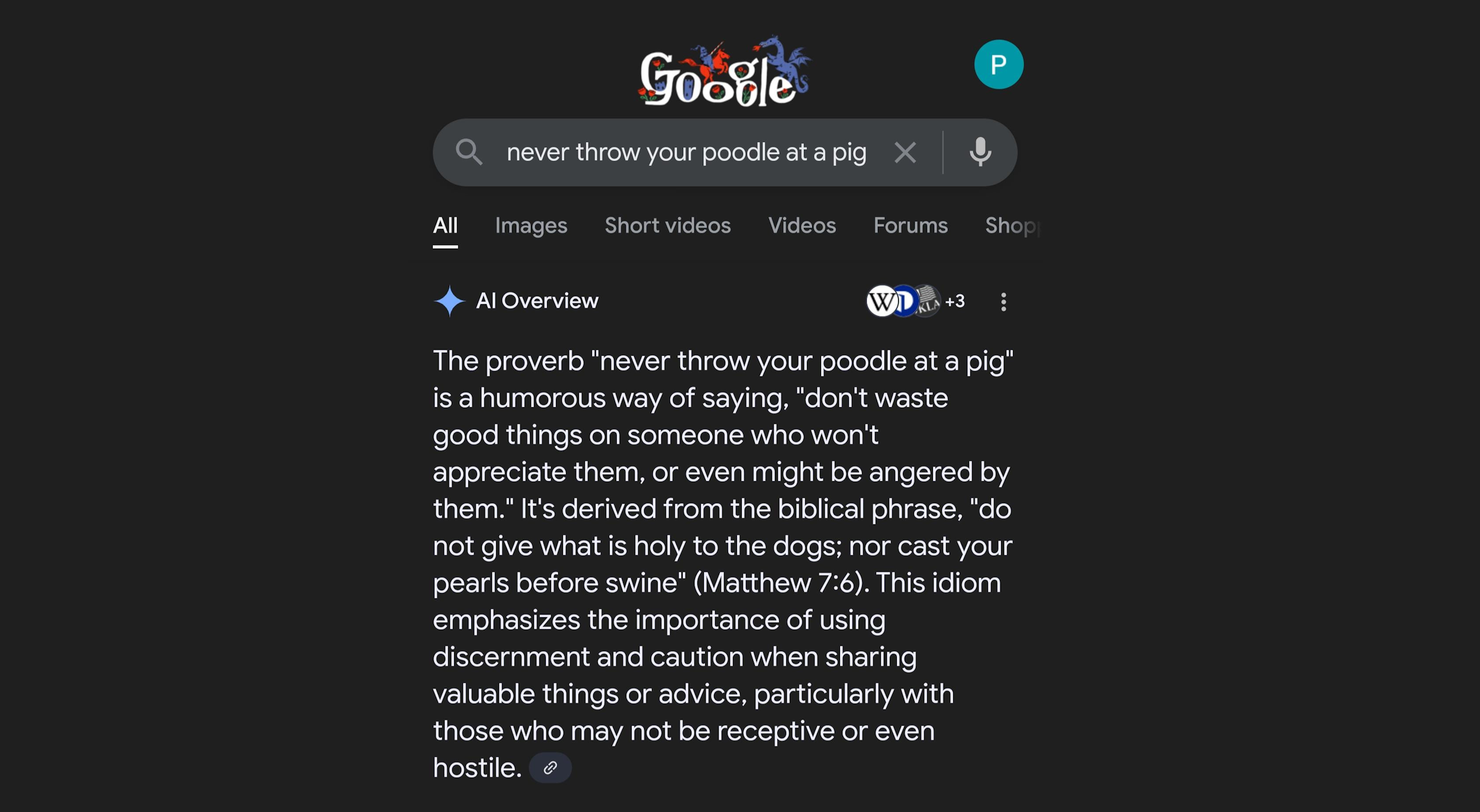

This latest series of AI hallucinations illustrates the issue perfectly. In one instance shared by The Sleight Doctor, the AI Overview even cited a Bible verse as the source of a made-up saying. What was the phrase?

“Never throw your poodle at a pig.”