On April 16, 2025, OpenAI released two new AI reasoning models: o3 and o4-mini. Their most notable advance over the company’s earlier models is an enhanced ability to reason with images.

These New Models Can “Think” With Images

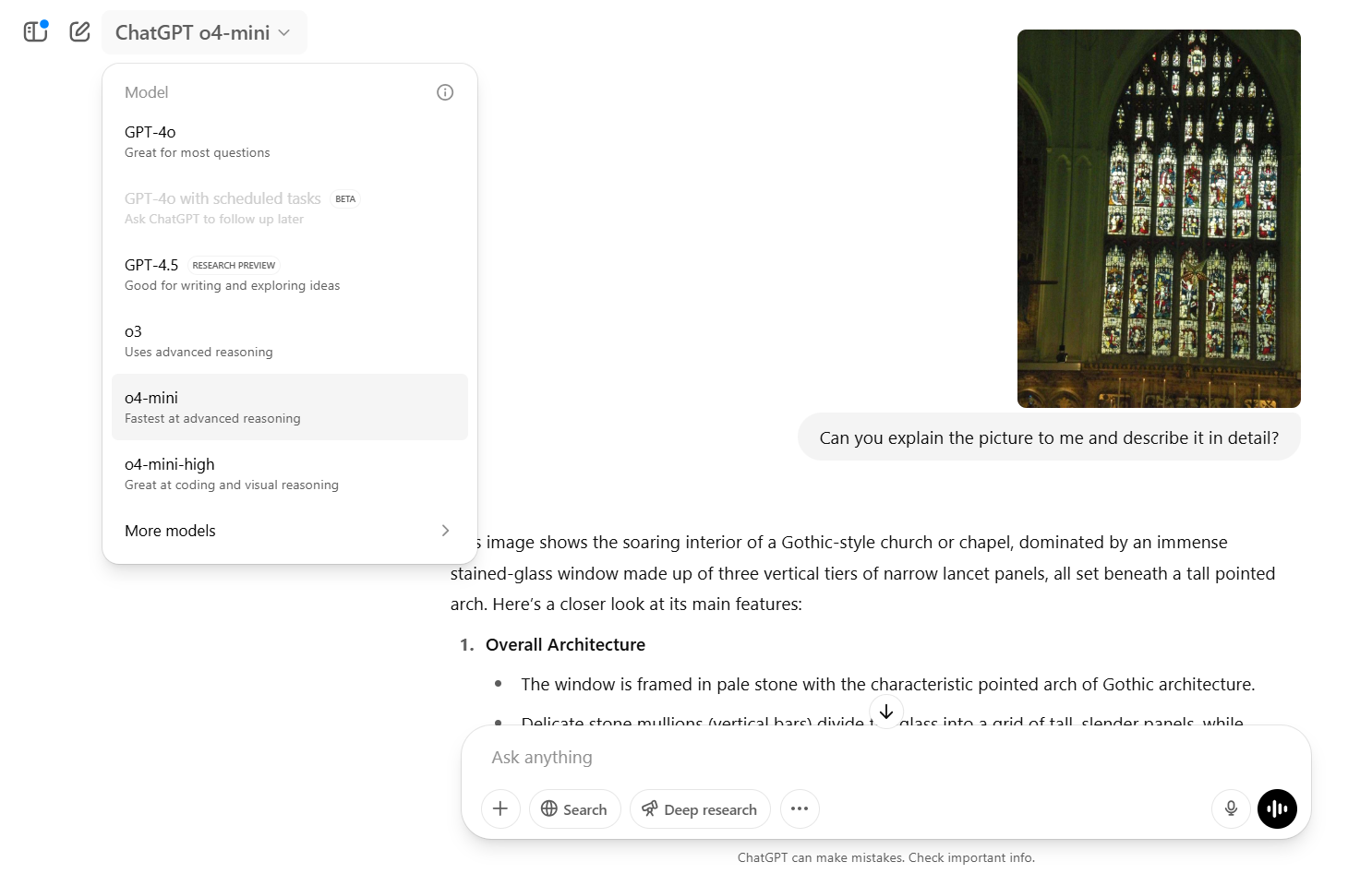

According to OpenAI, the models can analyze any image you upload, whether it’s a whiteboard sketch, a textbook diagram, or a graphics-heavy PDF. The announcement for o3 and o4-mini states:

They don’t merely view an image—they engage with it intellectually. This feature introduces a new dimension of problem-solving that integrates both visual and textual reasoning, as demonstrated in their superior performance across multimodal benchmarks.

Image analysis is built into the models’ chain-of-thought reasoning. While working through a problem, the models can zoom in on, rotate, or crop an image to examine it more closely. They also perform well with low-resolution images.
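To make the three operations concrete, here is a minimal sketch of cropping, rotating, and zooming on a tiny grayscale image stored as a list of rows. It only illustrates what the operations do to pixel data. It is not how o3 or o4-mini implement them internally, and the function names are hypothetical.

```python
# Illustrative only: a grayscale "image" is a list of rows of pixel values.
Image = list[list[int]]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Return the height x width region whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate by 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(column) for column in zip(*img[::-1])]


def zoom(img: Image, factor: int) -> Image:
    """Nearest-neighbor upscaling: repeat each pixel `factor` times in both axes."""
    zoomed: Image = []
    for row in img:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        zoomed.extend(list(wide_row) for _ in range(factor))
    return zoomed


if __name__ == "__main__":
    picture = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
    detail = crop(picture, top=0, left=1, height=2, width=2)
    print(zoom(rotate_90_clockwise(detail), factor=2))
```

A real pipeline would use an imaging library rather than nested lists, but the idea is the same: isolate a region, reorient it, and enlarge it before inspecting it.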

For example, when faced with a scientific problem involving a graphic, the model might focus on a specific detail, perform calculations using Python, and create a graph to illustrate its conclusions.

While reasoning, o3 and o4-mini can use any of the tools available in ChatGPT, such as web browsing, running Python code, and generating images. The models decide for themselves which tool suits each step of a task, so users and developers can hand them complex, multi-step workflows without specifying the tools in advance.
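The tool selection described above can be pictured as a loop in which the model names a tool and its arguments, and the host application runs it. The sketch below shows only the host side, under loud assumptions: the tool names, the stub implementations, and the hard-coded plan are all hypothetical, and in the real product the model produces the plan itself.

```python
from typing import Any, Callable

# A tool call is a tool name plus keyword arguments (hypothetical shape).
ToolCall = dict[str, Any]


def web_search(query: str) -> str:
    """Stub standing in for a web browsing tool; performs no network access."""
    return f"[stub search results for: {query}]"


def python_sum(values: list[float]) -> str:
    """Stub standing in for a code-execution tool; it only sums numbers."""
    return str(sum(values))


# Registry mapping tool names to callables (names are assumptions).
TOOLS: dict[str, Callable[..., str]] = {
    "web_search": web_search,
    "python_sum": python_sum,
}


def execute_plan(plan: list[ToolCall]) -> list[str]:
    """Run each requested tool in order and collect the results."""
    results: list[str] = []
    for call in plan:
        tool = TOOLS.get(call["name"])
        if tool is None:
            results.append(f"unknown tool: {call['name']}")
            continue
        results.append(tool(**call["arguments"]))
    return results


if __name__ == "__main__":
    # Hard-coded here; a reasoning model would choose these steps itself.
    plan = [
        {"name": "web_search", "arguments": {"query": "quarterly revenue"}},
        {"name": "python_sum", "arguments": {"values": [1.5, 2.5]}},
    ]
    print(execute_plan(plan))
```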

o4-mini-high is a variant of o4-mini that spends more time and compute on each prompt in exchange for better results. Typical use cases may include:

- Creating and assessing studies in biology, engineering, and other STEM disciplines, providing thorough, step-by-step reasoning accompanied by visual aids.

- Gathering and synthesizing information from diverse sources, such as online databases, financial reports, and market analysis, to derive business insights.

These models were trained with reinforcement learning, in which a model improves through feedback on the outcomes of its actions. As a result, they handle ambiguous problems more effectively, because they have learned when to use a specific tool to reach the desired outcome.

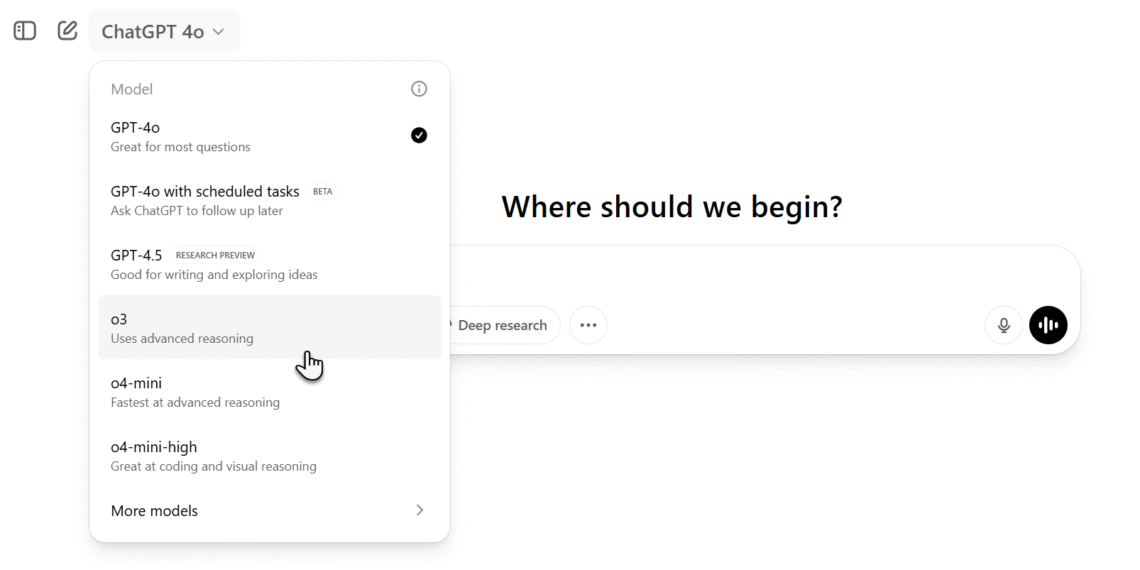

The o3, o4-mini, and o4-mini-high models are accessible to users with ChatGPT Plus, Pro, and Team accounts, with the o3-pro model anticipated to be released shortly. These options can be found in the model selector menu.

Free users can test the o4-mini model by selecting the Think option in the composer before submitting their queries.

Why ChatGPT’s Multimodal Capabilities Could Be Game-Changing

By empowering AI to “think with images,” OpenAI’s latest models are equipped to solve real-world problems that necessitate the interpretation of both text and visuals. This opens new possibilities, such as debugging code from screenshots, reading handwritten notes, analyzing scientific illustrations, and extracting insights from complex graphs. The outcome is a more context-aware version of ChatGPT.

These models are also more self-sufficient. Their ability to match a tool to a specific task without being told makes them more efficient. With their advanced reasoning capabilities and visual understanding, these more autonomous models are poised to play a significant role in research, business strategy, and creative fields.