GPT-4.5 was touted as a revolutionary advancement, but I found it lacking. OpenAI’s newest AI model has noticeable shortcomings and hasn’t lived up to the expectations set by its marketing.

7

Enhanced Emotional Intelligence, But Not Significantly

A key selling point of GPT-4.5 was its enhanced emotional intelligence. While it does show slight improvement over its predecessor, it’s not enough to make a real difference.

In my interactions with GPT-4.5, conversations still feel somewhat artificial. Although there’s potential for ChatGPT to develop emotional intelligence, I remain skeptical about AI’s ability to authentically showcase this trait.

I’m hopeful that future iterations will enhance performance, but I’m currently underwhelmed by this model’s emotional capabilities. It appears to jump to conclusions instead of taking the time to process and respond to prompts thoughtfully.

6

Fluffy Explanations in GPT-4.5

This version of ChatGPT was supposed to offer more logical reasoning in its responses, addressing a significant gap from earlier updates. However, it still falls short compared to other platforms like Perplexity, which excels in this area.

After using GPT-4.5, I’m not convinced its explanations are any more substantial than before. Often, they read like filler: extra words that frustrate more than they help.

Others have reported similar issues, noting that the software sometimes repeats phrases unnecessarily. A straightforward solution would be for ChatGPT to provide explanations followed by relevant follow-up questions, a feature its Deep Research tool excels at.

5

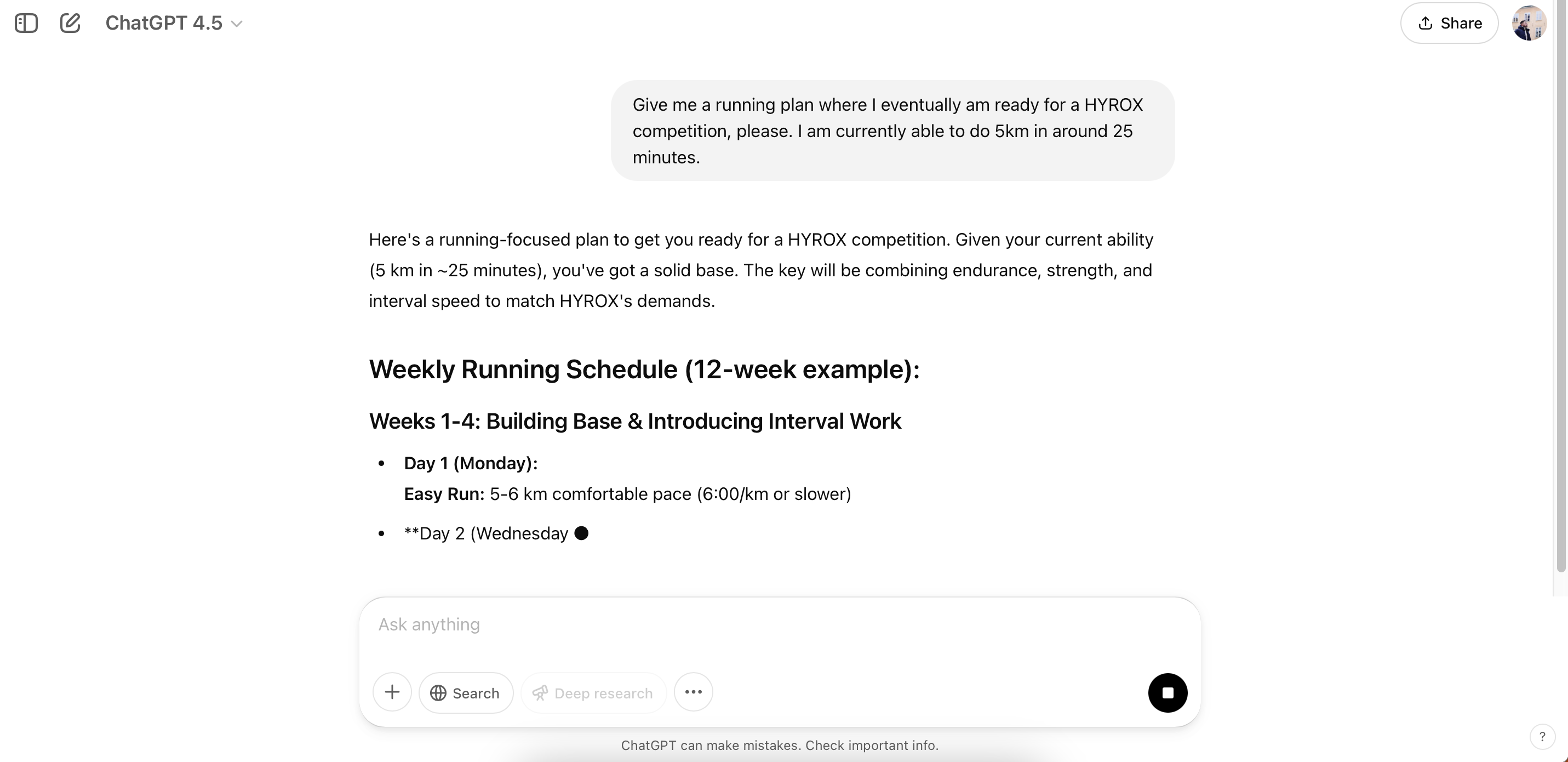

Overlooking My Requests

From my first interactions with ChatGPT, I’ve noticed the app frequently misses the nuances of my requests. I hoped these issues would be mitigated with GPT-4.5, but it seems they persist.

In this version, the AI often overlooks key points, resulting in generic responses that miss the essence of my queries. This forces me to rephrase my prompts, which can be quite frustrating.

To improve, I believe GPT-4.5 needs to engage in deeper analysis of my inputs. While I acknowledge that I could refine my approach by using specific prompts, it would help if the AI focused more closely on the most relevant parts of my requests.

4

Challenges with Longer Texts

Discussions around ChatGPT’s writing ability have persisted since its launch, and personally, I’ve never found it particularly impressive. Perhaps my background as a writer colors my perspective, but AI-generated content often lacks depth and authenticity.

That said, I’m open to new technologies and was curious to see how well ChatGPT could handle longer narratives. While it performs better with shorter texts, my experience shows that it struggles with extended writing assignments. Many others feel similarly about ChatGPT’s long-form capabilities.

With GPT-4.5, the writing still feels too structured and formulaic for longer pieces. Its misinterpretation of prompts sometimes requires me to rewrite them entirely. Additionally, while it’s more proficient with factual writing, it falls short when tasked with creative writing, as illustrated in the example below, which lacks any real substance.

Perhaps my opinion will shift with future updates, but I’m hesitant to rely on ChatGPT for my writing at this point. To improve, the AI would need more human insight and wider context to work with.

3

Usage Limits Hinder Experimentation

Like ChatGPT’s Deep Research tool and Sora for AI video projects, GPT-4.5 comes with usage restrictions. The cap isn’t explicitly stated, but in my experience it seems to allow around 45-50 prompts.

As a daily user of ChatGPT primarily for experimentation, I’ve enjoyed the unlimited opportunities offered by earlier versions. However, these new limits can stifle exploration and creativity.

I hope that these usage caps will be lifted eventually, but they’re quite frustrating for now. An easy fix would be to increase the limits or offer unlimited prompts for everyone. A simple notification indicating “X remaining” could also alleviate concerns until this is addressed.

2

Responses from GPT-4.5 Are Unpredictable

While GPT-4o isn’t flawless, its responses tend to be relatively consistent. Before submitting a prompt, I generally have a decent idea of whether I’ll need to revise it later. Unfortunately, this consistency is not present in GPT-4.5.

Some of its replies are quite good, while others fall flat. This inconsistency resembles issues I’ve experienced with DALL-E, OpenAI’s image generation model, which manages simpler requests better but struggles with more complex ones. While Deep Research aims to resolve this, it shares the same prompt limitations, and using it can feel excessive for straightforward interactions.

I recognize that features like emotional intelligence can take time to develop. Moving forward, the software needs to find a balance between mimicking human interaction and genuinely grasping the nuances of conversation. This would help it leverage the best aspects of GPT-4.

1

Problems with File Uploads

This is more of a technical glitch than a prompting issue, but I’ve had trouble uploading files while using GPT-4.5. These are precisely the moments when the model’s supposedly superior reasoning capabilities should shine, so running into problems is aggravating.

I encountered similar issues with Sora, where the app failed to recognize my existing ChatGPT Plus subscription upon sign-in. I trust that the file upload problem will eventually be resolved, and the sooner, the better.

While I understand that GPT-4.5 still has room for improvement, I’m currently disappointed in its capabilities. As with previous models from OpenAI, I expect these issues to be ironed out before too long, but GPT-4.5 won’t be my first choice for now.