The Privacy Risks of AI Chatbots

AI chatbots, such as ChatGPT and Google’s Gemini, have become increasingly popular due to their ability to generate responses that resemble human conversation. However, we must acknowledge that these technologies have their limitations. As our reliance on AI grows, it is essential to recognize that certain pieces of information are better kept confidential and should never be shared with AI chatbots.

Understanding the Privacy Challenges

While AI chatbots offer impressive capabilities, they are built on large language models (LLMs), which raise significant privacy and security concerns. Here are the key issues surrounding the use of these technologies:

- Data Collection Practices: Chatbots rely on extensive datasets, including user interactions, for training purposes. Companies like OpenAI provide options to opt out of data collection, but achieving full privacy can be complex.

- Server Vulnerabilities: User data stored on servers can be at risk from hacking attempts, leading to potential misuse of personal information by cybercriminals.

- Third-Party Access: Interactions with chatbots may be shared with third-party vendors or accessed by authorized personnel, increasing the chances of data breaches.

- Misleading Claims: While companies assert that they don’t sell user data for marketing, this information may still be shared for system maintenance or operational reasons.

- Generative AI Risks: As generative AI becomes more prevalent, critics argue that security and privacy risks could be amplified.

If you want to safeguard your data when using tools like ChatGPT, it’s crucial to understand these privacy issues. While companies strive for transparency, the intricacies of data sharing and security flaws warrant a cautious approach.

To maintain your privacy and security, here are five types of information you should avoid sharing with generative AI chatbots.

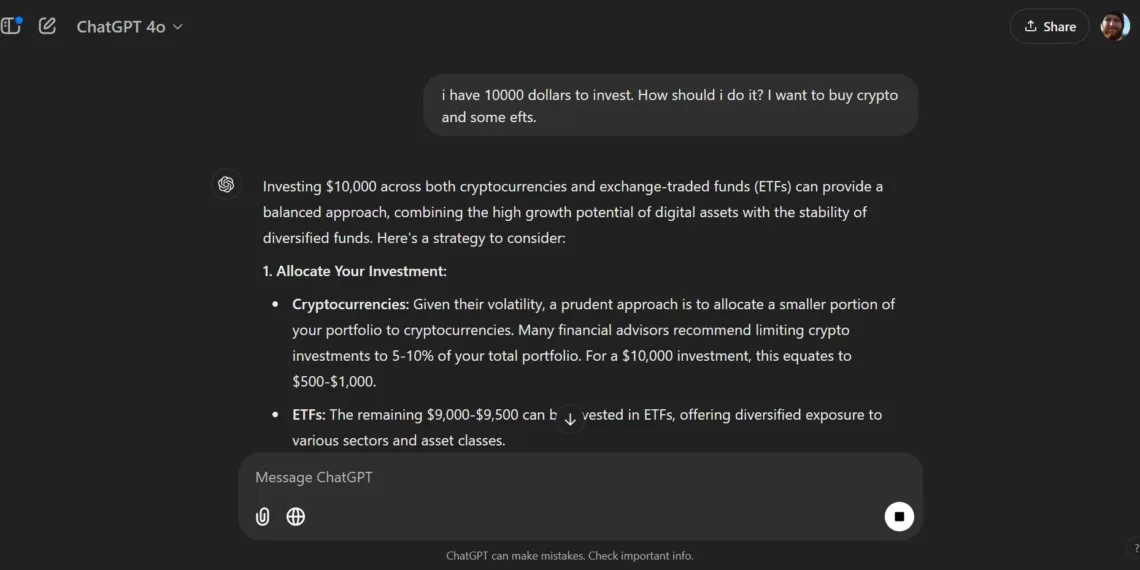

1. Financial Information

As AI chatbots are increasingly used for financial advice and personal finance management, it’s vital to recognize the risks associated with disclosing financial information.

Using chatbots as financial advisors could expose your sensitive financial data to cybercriminals looking to exploit it. Even if companies claim to anonymize conversation data, it may still be accessible to third parties or certain employees. For example, a chatbot might analyze your spending patterns for advice, but if this information falls into the wrong hands, it could lead to targeted scams, such as phishing attempts disguised as official bank communications.

To keep your financial information secure, limit discussions with AI chatbots to general topics. Sharing specific details like account numbers, transaction histories, or passwords can make you susceptible to fraud. If you need tailored financial advice, working with a licensed financial advisor is a safer route.
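If you do paste text into a chatbot, one precaution is to strip anything that looks like a payment card number first. The following is a minimal sketch of that idea, not a complete safeguard: the function names `luhn_valid` and `mask_card_numbers` and the placeholder text are illustrative assumptions, and the check only catches digit runs that pass the standard Luhn checksum used by payment cards. Bank account numbers, passwords, and transaction details would slip through.

```python
import re

# Candidate runs of 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits):
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def mask_card_numbers(text):
    """Replace likely payment card numbers with a placeholder (hypothetical helper)."""
    def _replace(match):
        digits = re.sub(r"[ -]", "", match.group())
        # Only mask runs that pass the checksum, to limit false positives.
        return "[CARD NUMBER REMOVED]" if luhn_valid(digits) else match.group()

    return CARD_CANDIDATE.sub(_replace, text)


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test number, not a real card.
    print(mask_card_numbers("My card is 4111 1111 1111 1111, why was it declined?"))
```

A filter like this is a backstop rather than a guarantee. The safer habit is still to leave specific financial details out of the conversation entirely.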

2. Personal and Sensitive Thoughts

Many individuals turn to AI chatbots for emotional support or therapy, often overlooking the potential risks to their mental health.

It’s important to understand that AI chatbots can only provide generic responses to mental health inquiries and lack the ability to offer personalized care. This limitation means any advice or recommendations they provide may not suit your unique situation, possibly harming your well-being.

Additionally, revealing personal thoughts to chatbots poses serious privacy threats. Your intimate reflections could be leaked or utilized as training data, leading to potential exploitation by malicious actors. To protect your privacy, view AI chatbots as tools for general information and support, not substitutes for professional therapy. If you need mental health assistance, consult a qualified professional who prioritizes your privacy and provides tailored advice.

3. Confidential Professional Information

Avoid sharing sensitive workplace information with AI chatbots. Major companies such as Apple have restricted employees’ use of these tools at work, and Google has cautioned its staff against entering confidential material into chatbots, citing data security concerns.

For instance, Samsung employees unintentionally uploaded sensitive code to ChatGPT, exposing confidential company information and prompting the company to ban AI chatbot use. Pasting proprietary code or internal documents into a chatbot to solve a work problem can leak that material outside your organization.
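For readers who do use chatbots for coding help, a quick check of a snippet before pasting it can catch the most obvious leaks. The sketch below is only an illustration under stated assumptions: `find_secrets` and the pattern list are hypothetical, the patterns cover just a few common shapes (a private key header, an AWS-style access key ID, and quoted values assigned to names like `password` or `api_key`), and it will produce both false positives and misses. It does not replace your organization's own policies or tooling.

```python
import re

# Illustrative patterns only; real secret scanners use far larger rule sets.
SECRET_PATTERNS = {
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
    "AWS-style access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hard-coded credential": re.compile(
        r"(?i)(?:password|passwd|secret|token|api_?key)\w*\s*[:=]\s*['\"][^'\"]+['\"]"
    ),
}


def find_secrets(snippet):
    """Return (line number, label) pairs for lines that look like they hold secrets."""
    findings = []
    for lineno, line in enumerate(snippet.splitlines(), start=1):
        for label, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, label))
    return findings


if __name__ == "__main__":
    code = 'host = "db.internal"\nAPI_KEY = "abc123"\n'
    for lineno, label in find_secrets(code):
        print(f"line {lineno}: possible {label}; remove it before sharing")
```

An empty result does not mean the snippet is safe to share. Proprietary logic, internal hostnames, and customer data are not detectable by patterns like these.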

4. Passwords

Under no circumstances should you share your passwords with AI chatbots. These services store conversation data on their servers, so a password you disclose could be exposed if that data is breached or accessed by others.

A data exposure incident involving ChatGPT in March 2023 raised alarms about the platform’s security, and Italy temporarily banned the service shortly afterward. Although that ban has since been lifted, the episode serves as a reminder that data breaches can happen even with enhanced security measures.

To protect your login credentials, never share them with chatbots. Use dedicated password managers or your organization’s secure IT protocols for managing your passwords.

5. Personally Identifiable Information

Just as on social media, never share personally identifiable information (PII) with an AI chatbot. PII includes details that can be used to identify you, such as your home address, Social Security number, date of birth, and health data. For example, mentioning your address while asking about local services could inadvertently expose you to risks such as identity theft.

To maintain your data privacy when interacting with AI chatbots, consider the following practices:

- Review the privacy policies of chatbots to understand the associated risks.

- Refrain from asking questions that might reveal personal information.

- Avoid sharing medical information with AI tools.

- Be cautious of the potential vulnerabilities of your data on social platforms that integrate AI.
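As a complement to the practices above, some identifiers follow predictable formats and can be scrubbed from a message before it is sent. The following is a rough sketch under several assumptions: `redact_pii`, the placeholder labels, and the patterns are illustrative, and they only cover email addresses, US-style Social Security numbers, and US-style phone numbers. Names, street addresses, and health details have no fixed format and will not be caught, so this cannot substitute for reviewing what you type.

```python
import re

# Order matters: SSNs are replaced before the broader phone pattern runs.
PII_PATTERNS = [
    ("[EMAIL]", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("[SSN]", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("[PHONE]", re.compile(
        r"(?<!\d)(?:\+?1[ .-]?)?\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}(?!\d)"
    )),
]


def redact_pii(text):
    """Replace a few recognizable identifier formats with placeholders."""
    for placeholder, pattern in PII_PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


if __name__ == "__main__":
    message = "Email me at jane.doe@example.com or call 555-123-4567."
    print(redact_pii(message))
```

Running the redaction locally, before the text ever leaves your device, is the point of the design. Once a message is sent, you no longer control where it is stored.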

AI chatbots offer impressive benefits, but they also come with serious privacy risks. Protecting your personal data when using tools like ChatGPT is manageable: take a moment to think about the potential consequences of sharing a piece of information, and you’ll quickly see what’s appropriate to discuss and what should remain private.